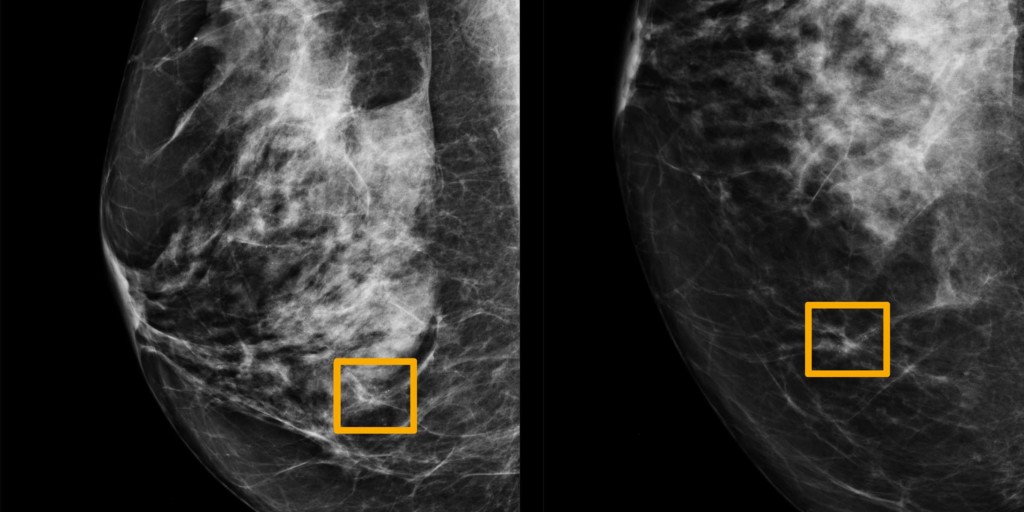

A yellow box indicates where an AI system found cancer hiding inside breast tissue (Northwestern University)

Scott Mayer McKinney (Google Health, Palo Alto, USA) and colleagues report in Nature that an artificial intelligence (AI) system outperformed human experts at predicting breast cancer from screening mammograms. They add that the findings of their study “highlight the potential of this technology to deliver screening results in a sustainable manner despite workforce shortages in countries such as the UK”.

McKinney et al write that interpreting mammograms to detect breast cancer “remains challenging”. “False positives can lead to patient anxiety, unnecessary follow-up and invasive diagnostic procedures. Cancers that are missed at screening may not be identified until they are more advanced and less amenable to treatment,” they explain. The authors add that AI “may be uniquely poised to help with this challenge”, noting that several studies have already shown AI to “meet or exceed the performance of human experts on several tasks of medical-image analysis”. Furthermore, given that the shortage of mammography professionals “threatens the availability and adequacy of breast cancer screening services around the world”, McKinney et al note that the “scalability of AI could improve access to high-quality care for all”.

In the study, therefore, they compared the performance of a deep learning model (DeepMind, Google) with that of human readers, using retrospective datasets from the UK and the USA. In the UK dataset, compared with the first human reader, the AI system demonstrated a significant absolute improvement in specificity of 1.2% (p=0.004) and an absolute improvement in sensitivity of 2.7% (p=0.0039). As the practice in the UK is for mammograms to be interpreted by two readers (with a third reader consulted if the first two disagree), McKinney et al also compared the results of the deep learning programme with those of the second reader. The AI system was non-inferior to the second reader in terms of both specificity and sensitivity. In the US dataset, compared with the typical reader (in the USA, only one radiologist interprets each mammogram), the AI system demonstrated significant absolute improvements in both specificity (5.7%, p<0.001) and sensitivity (9.4%, p<0.0001).

To further evaluate the AI system, McKinney et al explored whether using it could reduce the need for a second reader in the UK while preserving the standard of care. When the AI system agreed with the first reader, they treated the first reader’s decision as “final” (omitting the second reader and any ensuing arbitration). When the AI system disagreed with the first reader, the second reader’s decision and any ensuing arbitration were taken into account as usual. McKinney et al report: “This combination of human and machine results in performance equivalent to that of the traditional double-reading process but saves 88% of the effort of the second reader”. They add that “the AI system could also be used to provide automated, immediate feedback in the screening setting”.
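The decision rule in that simulation can be sketched in a few lines of code. This is a minimal illustration, not the authors' implementation: it assumes each reader (and the AI) gives a simple binary recall/no-recall decision, that an arbitration decision is available whenever the two human readers disagree, and the names `Case`, `traditional_double_read`, `ai_assisted_read` and `second_reads_saved` are hypothetical. The four example cases are invented for illustration and are not data from the study.

```python
from dataclasses import dataclass


@dataclass
class Case:
    """One screening case; True means 'recall the patient' (hypothetical model)."""
    first_reader: bool
    ai: bool
    second_reader: bool
    arbitration: bool  # assumed third-reader decision, used only if humans disagree


def traditional_double_read(case: Case) -> bool:
    """UK-style double reading: two readers, arbitration if they disagree."""
    if case.first_reader == case.second_reader:
        return case.first_reader
    return case.arbitration


def ai_assisted_read(case: Case) -> tuple[bool, bool]:
    """Return (final decision, whether the second reader was needed).

    If the AI agrees with the first reader, that decision is final and the
    second reader is skipped; otherwise fall back to normal double reading.
    """
    if case.ai == case.first_reader:
        return case.first_reader, False
    return traditional_double_read(case), True


def second_reads_saved(cases: list[Case]) -> float:
    """Fraction of cases in which the second reader was not needed."""
    used = sum(ai_assisted_read(c)[1] for c in cases)
    return 1 - used / len(cases)


# Invented toy cases (not from the study).
cases = [
    Case(first_reader=False, ai=False, second_reader=False, arbitration=False),
    Case(first_reader=True, ai=True, second_reader=True, arbitration=True),
    Case(first_reader=False, ai=True, second_reader=True, arbitration=True),
    Case(first_reader=False, ai=False, second_reader=True, arbitration=False),
]

print(second_reads_saved(cases))  # 0.75: only the third case needs a second read
```

In this toy set the AI disagrees with the first reader in one case out of four, so 75% of second reads are skipped. The study's 88% figure comes from applying the same kind of rule to its real UK data, which the sketch does not reproduce.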

The authors write that their results “highlight the potential of this technology to deliver screening results in a sustainable manner despite workforce shortages in countries such as the UK. Prospective clinical studies will be required to understand the full extent to which this technology can benefit patient care”.

The use of AI in clinical practice

As McKinney et al note, several studies have already shown that AI may be equal to, or even better than, humans at certain medical tasks (such as diagnosing disease). However, incorporating AI systems into clinical practice may be difficult. Thomas Davenport (Babson College, Wellesley, USA) and Ravi Kalakota (Deloitte Consulting, New York, USA) write in Future Healthcare Journal (June 2019) that “scarcely a week goes by” without a study, such as that of McKinney et al, showing that an AI system can “diagnose and treat a disease with equal or greater accuracy than human clinicians”. But they add that probability-based medicine (with AI) “brings with it many challenges in medical ethics and patient/clinician relationships”. Davenport and Kalakota explain that AI-based diagnosis and treatment recommendations “are sometimes challenging to embed in clinical workflows and electronic health records”, adding that such integration issues “have probably been a greater barrier to broad implementation of AI than any inability to provide accurate and effective recommendations”. On the ethical considerations of using AI systems in medicine, they comment: “It is important that healthcare institutions, as well as governmental and regulatory bodies, establish structures to monitor key issues, react in a responsible manner and establish governance mechanisms to limit negative implications”.

Similarly, in Annals of Vascular Surgery (December 2019), Juliette Raffort (University Hospital of Nice, Nice, France) and colleagues comment that while AI “is full of promise”, “there remains many challenges to face and unknowns to discover under the surface of the algorithmic iceberg”. “The involvement of surgeons and medical professionals in these technological changes is of utmost importance to guide and help data scientists and industrialists to develop relevant applications and guarantee a safe and adapted use in clinical practice,” they add.

Whatever the challenges of AI, it does not seem set to replace physicians anytime soon. Rather, as Raffort et al imply, AI will be a tool for physicians to use, not a substitute for them. Davenport and Kalakota observe that the advent of AI may mean clinicians “move towards tasks and job designs that draw on uniquely human skills like empathy, persuasion, and big-picture integration”. As they put it: “Perhaps the only healthcare providers who will lose their jobs over time may be those who refuse to work alongside AI.”